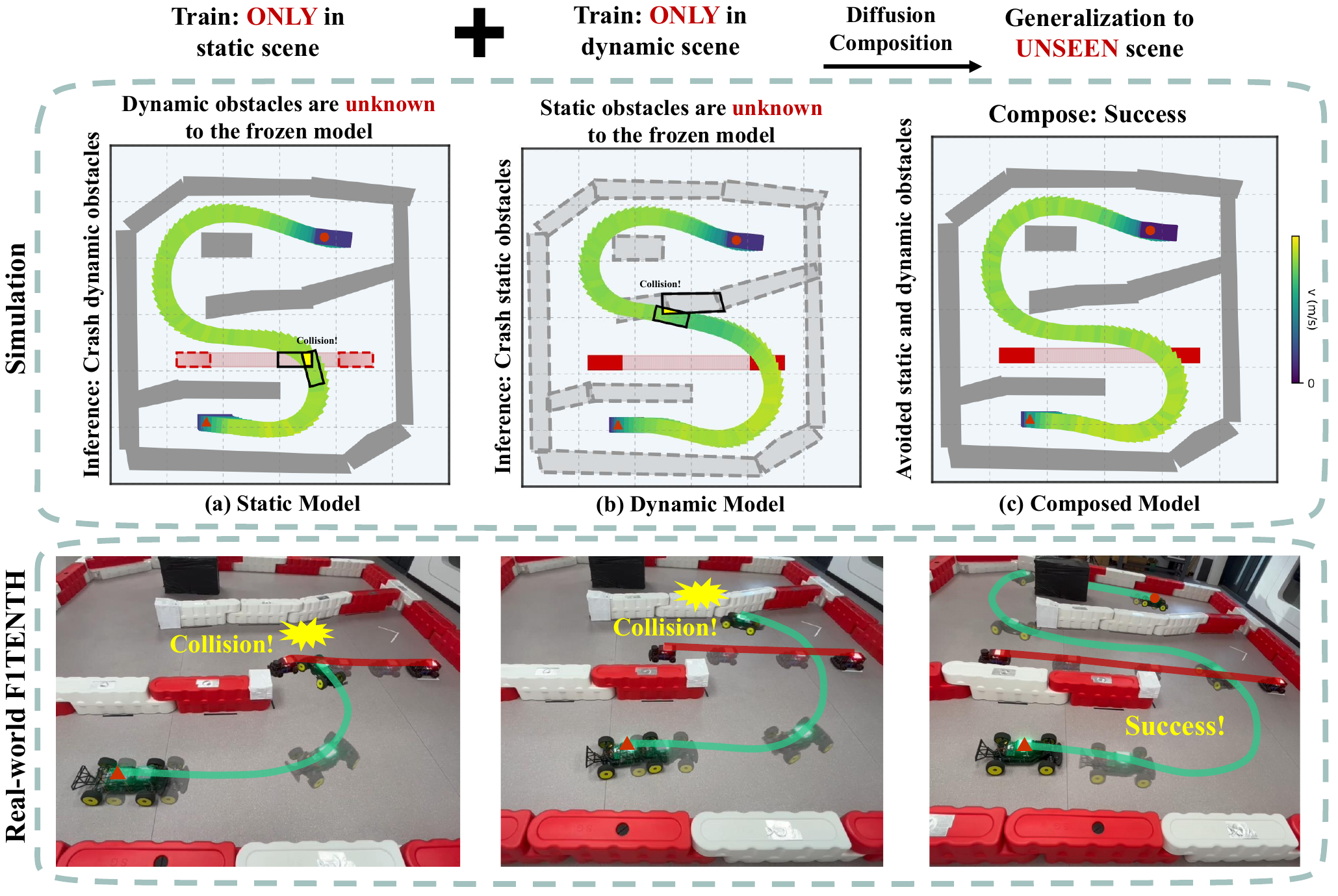

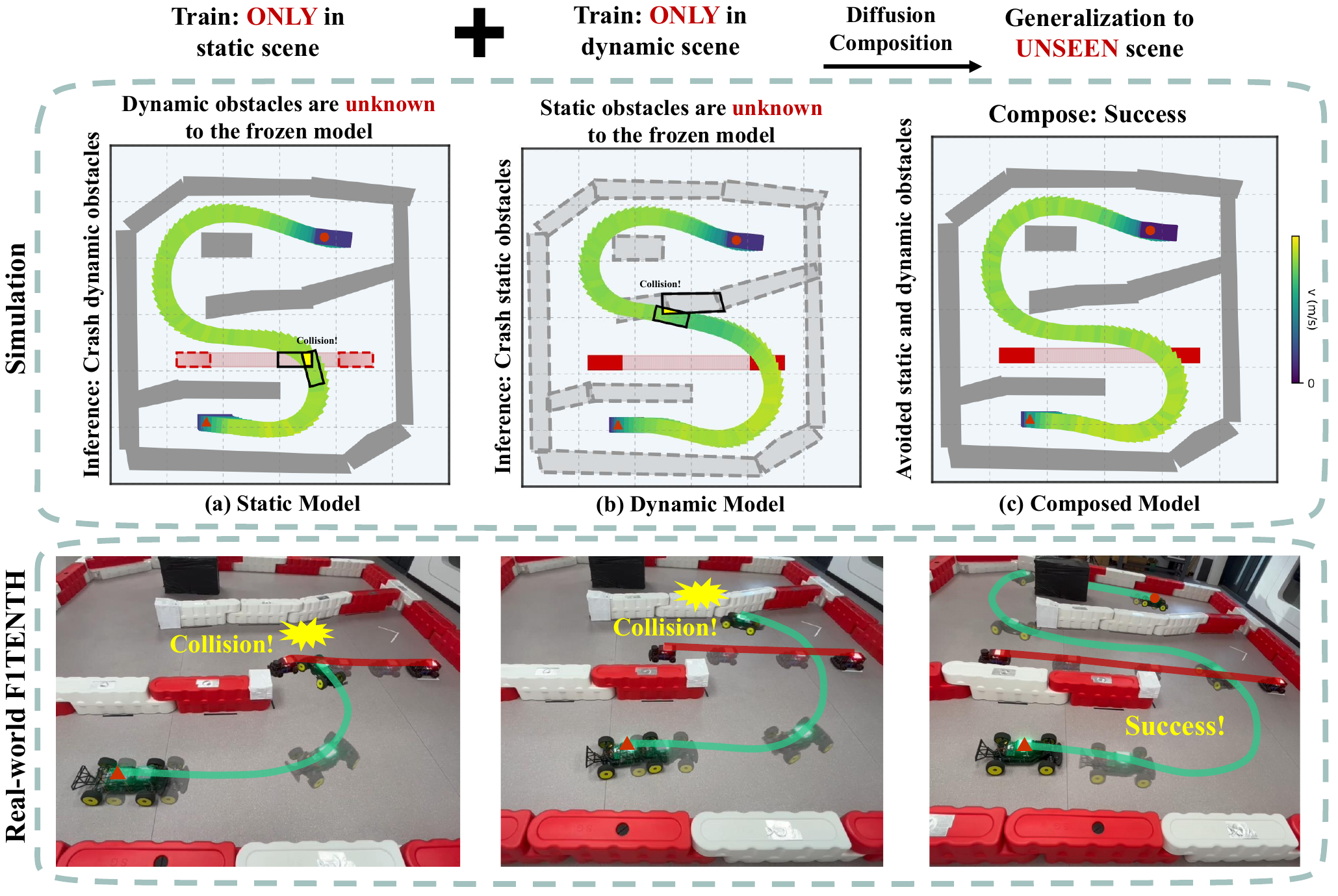

Teaser

Safe trajectory planning in complex environments must balance stringent collision avoidance with real-time efficiency, a long-standing challenge in robotics. In this work, we present a diffusion-based trajectory planning framework that is both rapid and safe. First, we introduce a scene-agnostic, MPC-based data generation pipeline that efficiently produces large volumes of kinematically feasible trajectories. Building on this dataset, our integrated diffusion planner maps raw onboard sensor inputs directly to kinematically feasible trajectories, enabling efficient inference while maintaining strong collision avoidance. To generalize to diverse, previously unseen scenarios, we compose diffusion models at test time, enabling safe behavior without additional training. We further propose a lightweight, rule-based safety filter that selects, from the candidate set, the trajectory that meets safety and kinematic-feasibility requirements. Across seen and unseen settings, the proposed method delivers real-time-capable inference with high safety and stability. Experiments on an F1TENTH vehicle demonstrate practicality on real hardware.
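To make the idea of a rule-based safety filter concrete, the following is a minimal sketch and not the implementation used in this work. It assumes that candidates are lists of `(x, y)` waypoints, that obstacles are circles `(cx, cy, r)`, and that kinematic feasibility can be approximated by a bound on discrete curvature. The names `select_trajectory`, `margin`, and `kappa_max`, as well as the largest-clearance tie-break, are hypothetical choices made for illustration.

```python
import math


def min_clearance(traj, obstacles):
    """Smallest distance from any waypoint to any obstacle surface."""
    return min(
        (math.hypot(x - cx, y - cy) - r
         for x, y in traj
         for cx, cy, r in obstacles),
        default=math.inf,  # no obstacles (or no waypoints): unconstrained
    )


def max_curvature(traj):
    """Largest discrete curvature: heading change divided by segment length."""
    worst = 0.0
    for (x0, y0), (x1, y1), (x2, y2) in zip(traj, traj[1:], traj[2:]):
        h0 = math.atan2(y1 - y0, x1 - x0)
        h1 = math.atan2(y2 - y1, x2 - x1)
        # Wrap the heading difference into [-pi, pi] before taking its size.
        dh = abs(math.atan2(math.sin(h1 - h0), math.cos(h1 - h0)))
        seg = math.hypot(x2 - x1, y2 - y1)
        if seg > 1e-9:
            worst = max(worst, dh / seg)
    return worst


def select_trajectory(candidates, obstacles, margin=0.2, kappa_max=2.0):
    """Return a candidate passing both rules, or None if none passes.

    Assumed rules (illustrative only):
      1. every waypoint keeps at least `margin` from every obstacle;
      2. discrete curvature never exceeds `kappa_max`.
    Among passing candidates, the one with the largest clearance is chosen.
    """
    feasible = [
        t for t in candidates
        if min_clearance(t, obstacles) >= margin
        and max_curvature(t) <= kappa_max
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda t: min_clearance(t, obstacles))


obstacles = [(1.0, 0.0, 0.3)]
straight = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (1.5, 0.0)]  # hits the obstacle
swerve = [(0.0, 0.0), (0.5, 0.3), (1.0, 0.6), (1.5, 0.6)]    # passes around it
best = select_trajectory([straight, swerve], obstacles)
```

Here `best` is the swerving candidate, because the straight one passes through the obstacle. Checking clearance only at waypoints is coarse; a real filter would also need to check the segments between waypoints and account for the vehicle footprint.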

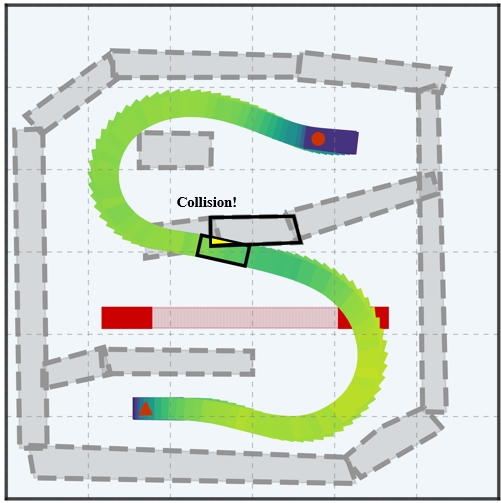

Static Diffusion Model

Initial Pose: [0.86, 0.03]

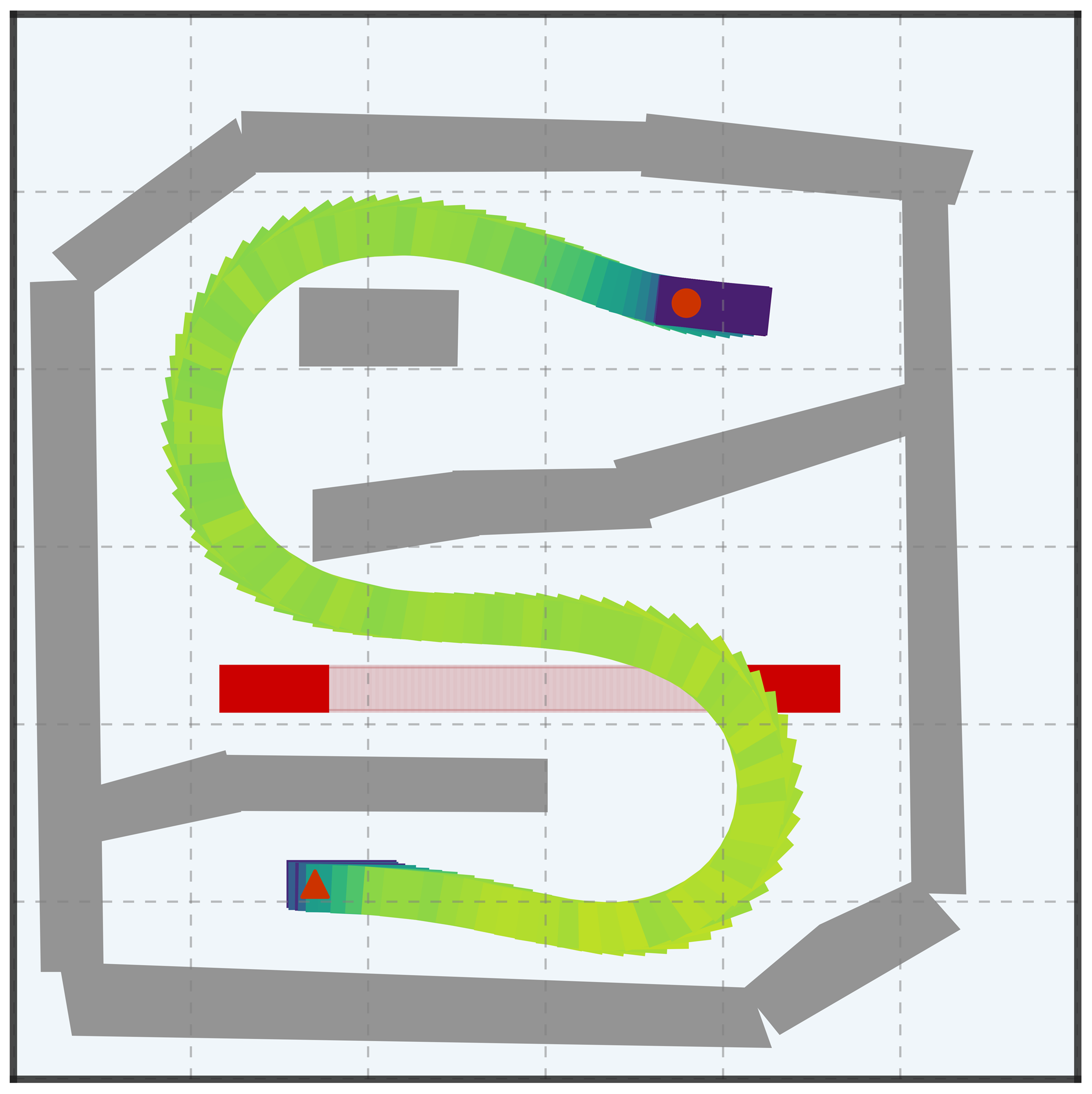

Initial Pose: [0.86, 0.03]

Initial Pose: [0.82, 0.07]

Initial Pose: [0.82, 0.07]

Dynamic Model